August 20, 1852, Wednesday

Page 2 of the New York Times, 695 words

Mr. ORVILLE HATCH, of Franklin, Conn., has become insane, he having devoted considerable attention to the subject of Spirit Rappings. Mr. HATCH is a farmer, and has been instrumental in introducing many important improvements in agriculture into the town in which he resides.

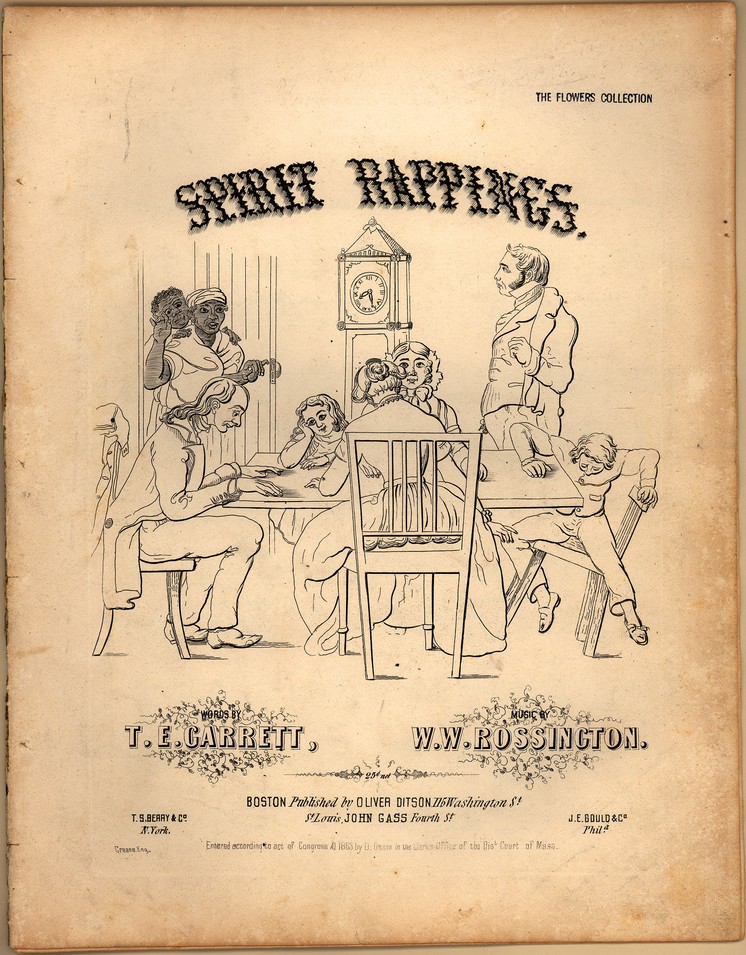

Madame Pamita, whose performances involve both spiritualism and really old American music, sent me a pointer to sheet music for an 1854 tune called “Spirit Rappings”, presumably because it’s a great number for Halloween. This post is my version of it.

Since I did a vocal part for once, the guitar and vocal parts are hard-panned left and right in the mix, so you can pull out the singing and do karaoke.

This recording is under a Creative Commons Attribution-ShareAlike 2.0 license. See also my boilerplate copyright statement.


Very cool, from story to execution. I especially liked the way you tracked it left and right, for easy remixing or karaoke.

Very nice! I am starting to get ideas about creating a new work made up wholly of your covers. . .

The story behind tracking left and right to enable remixing and karaoke is that I’m thinking about ways for songs to contain their own source code, so that every listenable object can easily be disassembled into its parts.

The model is the way that web pages always reveal the HTML, CSS and JavaScript they are made of. This led to fast uptake of ideas and evolution of techniques as developers cherry-picked the best ideas for their own creations, which were themselves available for cherry-picking. In the end the web as a whole became a freakishly productive and innovative environment.

Why try to do this with music? Because the long view of musical trends that I’m getting by digging through historical archives is making me aware of the way that music evolves by cherry-picking, and this is making me want to structure the musical environment to promote cherry-picking.

Even though the change would be structural, the impact would be in the music itself. Weak hooks would disappear from the flow within a generation or two, strong ones would be an even bigger part of the landscape. Better arrangements would be used as skeletons for new work. And the kind of ugly horribleness that the inbreeding of commercial pop culture gives us would be wiped out faster than a race of mules.

Nice recording, the guitar sound is great (vocals too)!

Glad it works for you, Toby. That’s a Shure SM81 mic on my Gibson L3. It’s a nice combination, very clear and well balanced.

The Gibson has a really nice full sound. Goes well with the song. The mic seems to pick up the full range too. Can’t wait to hear more!

Lucas, your disassembly comments are interesting. I think the parallel you’re drawing with web source code both works and is problematic at the same time.

It works in that one can talk about both web pages and musical works as being made up of objective component parts. But, more or less, the web objects are objectively objects, and the music “objects” are only subjectively so.

Although different components go into making a musical work, the music (unlike a web page) isn’t just the sum of those components; it’s more than that. And, IMHO, the “source code” of the music is also more than the collection of the sources.

So, part of what I think is an interesting possibility is seeing a musical work as having both multiple, overlapping “source codes” and multiple, overlapping “run times.”

In any case, I think it’s great and interesting that you’re creating new ways of releasing your music as much as sources for starting something new as end destinations.

Jay, I agree that the parallel with web source code is awkward.

Using stereo panning to make a two-voice mixdown its own source code only works for two-voice music that can be panned this way without making the music worse. There’s a musical price to pay.

For this overall way of thinking to take off, the availability of source has to do something for the listener. Maybe it would allow for context-sensitive auto mixes, like a 3-D experience. You could literally walk around in a mix that contained its sources!

3D is a fun concept. There would be a set of sources with spatial information attached to each. Maybe this is a soundscape for a walk in the forest, so there is a praying mantis by the base of a tree, a bird up in the branches, and a stream 25 feet away. The sound at any one time and in any one location is the projection of three dimensions onto the two in which we actually hear.
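To make the “projection” idea concrete, here is a toy Python sketch, not a real spatial audio engine. Each source carries a hypothetical (x, y, z) position in feet and a mono signal, and the listener hears the sum of all sources, each one attenuated by inverse distance and placed between the left and right ears with constant-power panning based on its direction. Real 3-D audio also uses head-related transfer functions, interaural delay and room reflections; all of that is left out, and the `Source` class and `render` function are invented for illustration.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Source:
    """A hypothetical sound source: a mono signal at a point in space (feet)."""
    name: str
    x: float  # east
    y: float  # north
    z: float  # up
    samples: list = field(default_factory=list)


def render(sources, lx, ly, lz, facing=0.0):
    """Mix 3-D sources down to (left, right) for a listener at (lx, ly, lz).

    facing is the listener's heading in radians, 0 meaning "looking north" (+y).
    Loudness falls off as 1/distance; direction sets constant-power L/R panning.
    Toy model: sources directly in front and directly behind pan identically.
    """
    length = max(len(s.samples) for s in sources)
    left = [0.0] * length
    right = [0.0] * length
    for s in sources:
        dx, dy, dz = s.x - lx, s.y - ly, s.z - lz
        # Clamp to 1 foot so a source at the listener's position doesn't blow up.
        dist = max(math.sqrt(dx * dx + dy * dy + dz * dz), 1.0)
        gain = 1.0 / dist
        # Horizontal angle relative to where the listener faces; positive = right.
        azimuth = math.atan2(dx, dy) - facing
        pan = math.sin(azimuth)            # -1 hard left ... +1 hard right
        theta = (pan + 1.0) * math.pi / 4  # map pan to 0 ... pi/2
        g_left, g_right = gain * math.cos(theta), gain * math.sin(theta)
        # Add this source's contribution into both ear channels.
        for i, v in enumerate(s.samples):
            left[i] += g_left * v
            right[i] += g_right * v
    return left, right
```

For the forest walk you might place the mantis at `Source("mantis", 3, 4, 0, ...)`, the bird in the branches at `Source("bird", 3, 4, 20, ...)` and the stream 25 feet to the east (all positions made up). Calling `render` again with a new listener position or heading is the “walking around in the mix” part, which only works because the individual sources are kept separate until playback.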

If you built an experience like that, the individual tracks would have to be broken out. But that’s not music, even though I *could* see that being something in games.

Can you explain more about “the music isn’t just the sum of those components–it’s more than that. And, IMHO, the source code of the music is also more than the collection of the sources”?

When I saw a presentation by the authors of Recording the Beatles (amazing book, btw), they played excerpts from the recent 5.1 surround mixes of the Beatles. Those mixes often had one or two instruments or voices panned to a single speaker, which lets you listen to individual parts in isolation and hear much more of how they were recorded, along with other studio sounds that were buried in the original mono and stereo mixes.

I mention this just as another example of multichannel mixes allowing a different way of getting into the music–there’s definitely something to be said for this approach!

***

I might also look at what you’ve done with this song as simultaneously releasing three versions:

1. the song you hear when you play both channels at once

2. the song you hear when you play the left channel only

3. the song you hear when you play the right channel only
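Getting those three versions out of one file is mechanical. Below is a minimal sketch using only the Python standard library, assuming the track has first been converted to a 16-bit PCM stereo WAV (the downloads may be in another format). Version 1 is simply the stereo file itself; versions 2 and 3 come from de-interleaving its samples. The function names and file paths are hypothetical.

```python
import array
import sys
import wave


def split_stereo(path):
    """Return (left, right, framerate) from a 16-bit PCM stereo WAV file."""
    with wave.open(path, "rb") as w:
        if w.getnchannels() != 2 or w.getsampwidth() != 2:
            raise ValueError("expected 16-bit stereo PCM")
        rate = w.getframerate()
        data = array.array("h", w.readframes(w.getnframes()))
    if sys.byteorder == "big":
        data.byteswap()  # WAV samples are stored little-endian
    # Stereo frames are interleaved L, R, L, R, ... so take every other sample.
    return data[0::2], data[1::2], rate


def write_mono(path, samples, rate):
    """Write one channel's 16-bit samples out as a mono WAV file."""
    out = array.array("h", samples)
    if sys.byteorder == "big":
        out.byteswap()
    with wave.open(path, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)
        w.setframerate(rate)
        w.writeframes(out.tobytes())
```

For example, `left, right, rate = split_stereo("spirit-rappings.wav")` followed by `write_mono("left-only.wav", left, rate)` and `write_mono("right-only.wav", right, rate)` would give the two single-channel versions, with the guitar on one and the vocal on the other.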

The fact that these versions are all in one file means different things to different audiences–to a listener on an iPod, it’s maybe inconvenient to switch between the versions; to a musician with a multitrack system, maybe it’s a convenient format to work with, etc.

But (and this gets to your question), part of what’s happening is that you are deciding on some part of your music to be the component “atoms”–and this is either arbitrary or an artistic decision, or somewhere in between. And that decision (or arbitrariness) is something people experience as listeners and/or as musicians who can build on your work.

For example, why not record every guitar string on its own track? Or, separate notes above middle C on one track, and notes below on another? Or, make each bar of a piece its own song?

There are a lot of ways to listen to and build upon music in component terms, and those ways are overlapping and simultaneously valid starting points for both experiencing the music and for building new / different musics.

As a musician, you give people some starting points that represent your perspective and process–but then others find their own starting points themselves, as listeners or players.

In this way, I’d see music as embodying potentials more on the order of the web (links) than of web pages (code). The source code of your music is ultimately the “links,” not just the tracks.

So, one way I’d look at what you are doing is helping people get into your music at a different level where they might discover or make new links. And, mostly what I am saying is that, with music, there are a lot of different, overlapping, levels that can work this way.

Thank you so much for the recording of this song. I am interviewing Dr. Jan Vandersand on my podcast (http://weareoneinspirit.blogspot.com/) this week and found your blog while researching spiritualism in the 1800s.

LavendarRose

Thanks for letting me know that it was useful, Yvonne. Supporting people looking for historical info is a big goal of my project, so it makes me glad to hear that I accomplished it.

Keep in touch.